Some kind of mushroom on the edge of a log in Jedediah Smith State Park

Howland Hill Rd in Jedediah Smith State Park

Mill Creek near Howland Hill Road in Jedediah Smith State Park

A view of the Pacific Ocean from the top of the Trees of Mystery aerial tramway

Klamath Beach a little before sunset

Redwood National Park (Prairie Creek State Park)

I bought an electric car last month, which got me interested in my electric bill. I was surprised to find out that my electric company lets you export an hour-by-hour usage report for up to 13 months.

There are two choices of format: CSV and XML. I deal with a lot of CSV files at work, so I started there. The CSV file was workable, but not great. Here is a snippet:

```
"Data for period starting: 2022-01-01 00:00:00 for 24 hours"
"2022-01-01 00:00:00 to 2022-01-01 01:00:00","0.490",""
"2022-01-01 01:00:00 to 2022-01-01 02:00:00","0.700",""
```

The “Data for period” header was repeated at the beginning of every day. (March 13, which only had 23 hours due to Daylight Saving Time adjustments, also said “for 24 hours”.) There were some blank lines. It wouldn’t have been hard to delete the lines that didn’t correspond to an hourly meter reading, especially with BBEdit, Vim, or a spreadsheet program. But I was hoping to write something reusable in Python, preferably without regular expressions, so I decided it might be easier to take a crack at the XML.
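For comparison, the CSV cleanup doesn't strictly need regular expressions either. Here is a minimal sketch using Python's built-in csv module. It assumes every hourly row looks like the snippet above (a quoted "start to end" range, a kWh value, and an empty third field). The function name parse_usage_csv is hypothetical, and because it skips the "Data for period" rows entirely, it doesn't care how many hours a day claims to have.

```python
import csv
import io
from datetime import datetime


def parse_usage_csv(text):
    """Return (start, end, kwh) tuples from the hourly CSV export.

    Assumes rows shaped like "<start> to <end>","<kWh>","" and skips the
    "Data for period" header rows and blank lines.
    """
    readings = []
    for row in csv.reader(io.StringIO(text)):
        # Blank lines arrive as empty lists; header rows have a single field.
        if len(row) < 2 or " to " not in row[0]:
            continue
        start_text, end_text = row[0].split(" to ")
        start = datetime.strptime(start_text, "%Y-%m-%d %H:%M:%S")
        end = datetime.strptime(end_text, "%Y-%m-%d %H:%M:%S")
        readings.append((start, end, float(row[1])))
    return readings


# The snippet from above, blank lines included.
sample = '''"Data for period starting: 2022-01-01 00:00:00 for 24 hours"

"2022-01-01 00:00:00 to 2022-01-01 01:00:00","0.490",""

"2022-01-01 01:00:00 to 2022-01-01 02:00:00","0.700",""
'''

for start, end, kwh in parse_usage_csv(sample):
    print(start, kwh)
```

The timestamps come out as naive local times, exactly as they appear in the file.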

Here is the general structure of the XML:

```xml
<entry>
  <content>
    <IntervalBlock>
      <IntervalReading>
        <timePeriod>
          <duration>3600</duration>
          <start>1641024000</start>
        </timePeriod>
        <value>700</value>
      </IntervalReading>
    </IntervalBlock>
  </content>
</entry>
```

Just like the CSV, there is an entry for each day, called an IntervalBlock. It has some metadata about the day that I’ve left out because it isn’t important. What I care about is the IntervalReading, which has a start time, a duration, and a value. The start time is the Unix timestamp of the beginning of the period, and the value is in watt-hours. Since each time period is an hour, you can also interpret the value as the average power draw in watts over that period.
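To make the units concrete, here is the single reading from the excerpt above worked through by hand. The Pacific Standard Time offset is my assumption about the account's time zone (it fits January in California). With it, the start lands at midnight on January 1, 2022, and 700 Wh over a 3,600-second period is 0.7 kWh, or an average draw of 700 W.

```python
from datetime import datetime, timedelta, timezone

# One IntervalReading from the excerpt above.
start = 1641024000  # Unix timestamp: seconds since 1970-01-01 00:00 UTC
duration = 3600     # length of the period in seconds
value = 700         # energy used during the period, in watt-hours

utc_start = datetime.fromtimestamp(start, tz=timezone.utc)
# Assumption: the meter is in Pacific Standard Time (UTC-8) in January.
pst = timezone(timedelta(hours=-8), "PST")
local_start = utc_start.astimezone(pst)

kwh = value / 1000                     # watt-hours to kilowatt-hours
avg_watts = value / (duration / 3600)  # Wh divided by hours gives average W

print(utc_start.isoformat())    # 2022-01-01T08:00:00+00:00
print(local_start.isoformat())  # 2022-01-01T00:00:00-08:00
print(kwh, avg_watts)           # 0.7 700.0
```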

XML is not something I deal with a lot day to day, so I had to read some Python docs, but it turned out to be very easy to parse:

```python
from xml.etree import ElementTree
from datetime import datetime

import pandas as pd
import matplotlib.pyplot as plt

# Short aliases for the two XML namespaces used in the export
ns = {'atom': 'http://www.w3.org/2005/Atom', 'espi': 'http://naesb.org/espi'}

tree = ElementTree.parse('/Users/nathan/Downloads/SCE_Usage_8000647337_01-01-22_to_12-10-22.xml')
root = tree.getroot()

# Start of each hourly reading, converted from a Unix timestamp to a local datetime
times = [datetime.fromtimestamp(int(x.text))
         for x in root.findall("./atom:entry/atom:content/espi:IntervalBlock/espi:IntervalReading/espi:timePeriod/espi:start", ns)]

# Energy used during each hour, in watt-hours
values = [float(x.text)
          for x in root.findall("./atom:entry/atom:content/espi:IntervalBlock/espi:IntervalReading/espi:value", ns)]

ts = pd.Series(values, index=times)
```

The ns dictionary lets me give aliases to the XML namespaces to save typing. The two findall calls extract all of the start tags and all of the value tags. I turn the timestamps into datetimes and the values into floats. Then I make them into a Pandas Series (which, since it has a datetime index, is in fact a time series).
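One thing to keep in mind with two separate findall calls is that the start and value lists only line up if every IntervalReading has both tags. A slightly more defensive sketch walks each IntervalReading and pulls both fields from the same element. It assumes the root element is an Atom `<feed>`, as the `./atom:entry` path implies; read_intervals and the tiny sample feed are hypothetical, not real SCE output. It also uses UTC rather than local time so the result doesn't depend on the machine's time zone.

```python
from datetime import datetime, timezone
from xml.etree import ElementTree

ns = {"atom": "http://www.w3.org/2005/Atom", "espi": "http://naesb.org/espi"}


def read_intervals(root):
    """Yield (start, watt_hours) pairs, one per IntervalReading."""
    path = "./atom:entry/atom:content/espi:IntervalBlock/espi:IntervalReading"
    for reading in root.findall(path, ns):
        start = reading.find("espi:timePeriod/espi:start", ns)
        value = reading.find("espi:value", ns)
        if start is None or value is None:
            continue  # skip an incomplete reading instead of misaligning the data
        yield datetime.fromtimestamp(int(start.text), tz=timezone.utc), float(value.text)


# Hypothetical one-reading feed using the same namespaces as the export.
sample = """<feed xmlns="http://www.w3.org/2005/Atom" xmlns:espi="http://naesb.org/espi">
  <entry><content><espi:IntervalBlock>
    <espi:IntervalReading>
      <espi:timePeriod>
        <espi:duration>3600</espi:duration>
        <espi:start>1641024000</espi:start>
      </espi:timePeriod>
      <espi:value>700</espi:value>
    </espi:IntervalReading>
  </espi:IntervalBlock></content></entry>
</feed>"""

readings = list(read_intervals(ElementTree.fromstring(sample)))
print(readings)
```

The pairs can then be split back into the index and values of the Pandas Series, the same as before.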

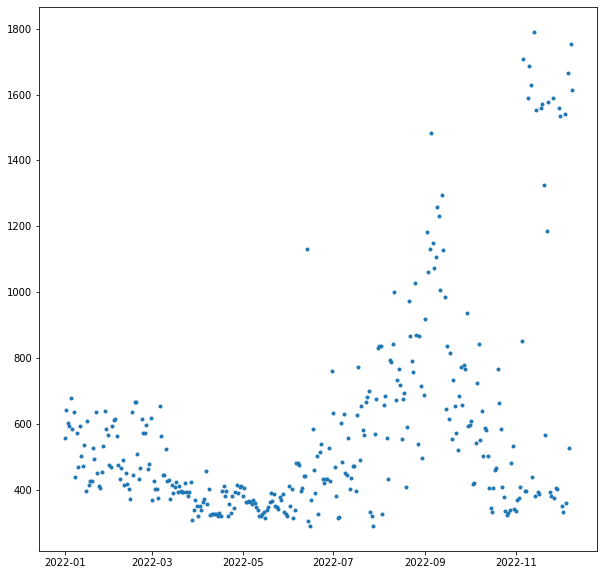

My electricity is cheaper outside of 4-9 p.m., so nighttime is the most convenient time to charge. I made a quick visualization of the last year by restricting the data to midnight through 4:00 a.m. and taking the average for each day. Then I plotted it with dots as markers and no connecting lines:

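```python
# Keep readings that start between midnight and 4:00 a.m., then average them per calendar day.
# Note x.date() is a method call; x.date without parentheses would group by a bound method.
plt.plot(ts[ts.index.hour < 4].groupby(lambda x: x.date()).mean(), ls='None', marker='.')
```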
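The filter-and-group one-liner is dense, so here is roughly what it computes, written out in plain Python without Pandas: keep the readings that start before 4:00 a.m., bucket them by calendar date, and average each bucket. The nightly_averages function and the sample readings are made up for illustration; they are not real meter data.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def nightly_averages(readings, last_hour=4):
    """Average the hourly Wh readings that start before `last_hour` each day.

    `readings` is an iterable of (datetime, watt_hours) pairs in local time.
    """
    by_day = defaultdict(list)
    for start, wh in readings:
        if start.hour < last_hour:  # keep midnight through 3:59 a.m. starts only
            by_day[start.date()].append(wh)
    return {day: mean(values) for day, values in sorted(by_day.items())}


# Hypothetical readings for illustration only.
sample = [
    (datetime(2022, 11, 1, 0), 1400.0),
    (datetime(2022, 11, 1, 1), 1500.0),
    (datetime(2022, 11, 1, 5), 300.0),  # after 4 a.m., ignored
    (datetime(2022, 11, 2, 3), 450.0),
]
print(nightly_averages(sample))
```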

As expected, you see moderate use in the winter from the heating (gas, but with an electric blower). Then a lull for the in-between times, a peak in the summer where there is sometimes a bit of AC running in the night, another lull as summer ends, and then a bit of an explosion when I started charging the car.

For now, I am actually using a 120 V plug, which can only draw 1 to 1.5 kW and is a slow way to charge a car. Eventually I will get a 240 V circuit and charger, increase the charging speed 5x, and have even bigger spikes to draw.
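The rough numbers behind those power levels and the 5x figure can be checked with P = V × I. The amperages below are my assumptions, not from the post: continuous EV charging is commonly limited to 80% of the circuit rating, which gives 12 A on a 15 A outlet and 32 A on a 40 A circuit. Since the meter reports watt-hours per hour, a 1.44 kW draw would show up as roughly 1,440 Wh per reading.

```python
# Assumed circuit ratings (not from the post): a 15 A, 120 V outlet limited to
# 12 A continuous, and a 40 A, 240 V circuit limited to 32 A continuous.
LEVEL1_VOLTS, LEVEL1_AMPS = 120, 12
LEVEL2_VOLTS, LEVEL2_AMPS = 240, 32

level1_kw = LEVEL1_VOLTS * LEVEL1_AMPS / 1000  # power = volts x amps, in kW
level2_kw = LEVEL2_VOLTS * LEVEL2_AMPS / 1000

speedup = level2_kw / level1_kw

# Energy added during the cheap midnight-to-4:00 a.m. window at each rate.
hours = 4
print(f"120 V: {level1_kw:.2f} kW, {level1_kw * hours:.2f} kWh in {hours} h")
print(f"240 V: {level2_kw:.2f} kW, {level2_kw * hours:.2f} kWh in {hours} h")
print(f"Speedup: {speedup:.1f}x")
```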

Hermosa Beach, California

Two trees

Hermosa Beach

Santa Monica Bay looking north from Hermosa Beach

Nursery under the power lines, Redondo Beach, California

I’m back from a blogging hiatus for a quick complaint about the sorry state of Amazon’s account system, especially when it comes to households and minors.

Everything that follows is to the best of my knowledge, and only includes the features I actually use.

A regular Amazon account can be used for shopping, Kindle, and Prime Video (among other things). You can have a maximum of two regular Amazon accounts in a household, and they can share Prime shipping benefits and Kindle purchases, but not Prime Video. However, under the primary member’s Prime Video login, you can have sub-profiles to separate household members.

On a Kindle device, you can share ebook purchases with minors using Amazon Kids. This is not a true Amazon or Kindle account, but a sub-account within a regular Amazon account. That is, you sign into the Kindle with the parent’s account and then enter Kid Mode. All purchases (or library check-outs) must be made on the parent’s account and then copied over to the child’s library using a special Amazon dashboard.

Note that Amazon Kids+ is a different product: it is basically Kindle Unlimited for Amazon Kids accounts. I have used it and I think the selection is terrible. For example, they love to carry the first book of a series but not the remainder of the series. Also, when I last used it, there was no way to know which books were available through Amazon Kids+ short of searching for the book on a kid’s device.

There is a shopping feature called Amazon Teen. This is essentially a regular Amazon account, but it is linked to a parent’s account, and purchases are charged to the parent’s card, with the option to require purchase-by-purchase approval from the parent. This is a way to share Prime shipping features with a teenager, and the only way to share Prime shipping with more than a single person in your household. Crucially, Amazon Teen accounts cannot purchase Kindle books, log into a Kindle device, or share Kindle purchases with the parent’s account.

Until now, I have mostly survived in the Amazon Kids world, despite the friction involved in getting a book onto a kid’s device. My kids have mostly adapted by ignoring their Kindles and reading books in Libby on their phones. This isn’t a good fit for my teen and tween, who need to read books at school. They are not allowed to use phones at school, but are allowed to use e-ink Kindles.

Everything came to a head this weekend, when I tried to make them both Amazon Teen accounts, which are useful in their own right. (The current practice is that they text me an Amazon link when they need something, and it will be nice for them to be a little more self-sufficient.) This was before I knew that Amazon Teen accounts couldn’t buy Kindle books (why?), so I then attempted to create them each a second account, not linked to mine in any way, for Kindle purposes.

That is when things came to a screeching halt, but this is at least partially my fault. While I had been looking into this, I was downloading Kindle books to my computer using a Keyboard Maestro script that simulated the five clicks required for each download. I’m pretty sure that this triggered some robot-defensive behavior from Amazon, which made it impossible for me to create an account without a cell phone number attached to it. But all of our household phone numbers are already attached to other accounts, and attempting to remove them put me into an infinite loop of password prompts and OTP requests.

I eventually solved this problem in two different ways. One involved talking to a human at Amazon’s tech support, which I admit is better than many of the other tech companies at solving this kind of problem. The other involved a VPN, which seems to have freed me from bot-suspicion.

But in the end, I also put in an order for a Kobo. I’m told they can sync directly with Libby for library checkouts, unlike Amazon, which requires a complex multi-click dance that might prevent my kids from using their Kindles even if I do get their accounts squared away. And these are the last major micro-USB devices in the house, so maybe the time has come to move on. Ironically, the only way I could find a Kobo that shipped in less than a week was to buy it from Amazon.

Shell Beach, California